The Long View

They always say time changes things, but you actually have to change them yourself.

--Andy Warhol

It was confusing that computer monitors looked so much like television sets. It made it seem that it would be easy to get movies or television shows to play on your computer. But more than a decade passed between the time people started seriously trying to get that to work (about 1987) and the time it started working routinely (about 2000). Of course we do it all the time now. So why was it so hard, and what changed between then and now?

It was hard because it required very high data throughput. The way you want video is the way it is now: full screen at 24 frames per second or better. Full screen in 1987 meant 640x480 pixels. At 24 bits (3 bytes) per pixel, an image that size took 921,600 bytes per frame. At 24 frames per second (the standard for film) this meant 22,118,400 bytes (21.09 MBytes) per second. The video had to come from your hard disk or from CDROM. There was a lot of excitement about CDROM at that time, but a CDROM disc could hold only about 30 seconds of uncompressed video at that rate, and anyway, the drives could transfer only 150 KBytes per second. It would take about six seconds to move a single frame from a CDROM into a computer's memory. Hard disks were faster, but for the most popular personal computer at the time, the IBM-AT and its clones, the hard disk could move only about 100-200 KBytes into the computer every second. That beat a CDROM, but it was a far cry from what it would take to play a digital episode of The Cosby Show on your computer screen. Getting video playback on the computer would require a combination of advances in computer hardware to move bits at the speed required and some clever software tricks to reduce the number of bits that had to move. In the meantime, while video consumers watched their favorite shows on analog television sets, many of those same television shows were edited and composed using computers. How did that work? Shouldn't video postproduction be a more demanding task than simple playback? What kind of computer were they using?
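The arithmetic in this paragraph is easy to check. The Python sketch below recomputes it from the figures given here (640x480 pixels, 3 bytes per pixel, 24 frames/s, 150 KBytes/s for CDROM, 200 KBytes/s at the upper end for an AT hard disk), taking a KByte as 1024 bytes and an MByte as 1024 KBytes. The 650 MByte disc capacity is an assumption, since the text gives only the resulting "about 30 seconds".

```python
# Data rates for uncompressed full-screen video, circa 1987.
# Assumption: a CDROM disc holds about 650 MiB (the text gives only "about 30 seconds").

KIB = 1024
MIB = 1024 * KIB

def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one uncompressed frame."""
    return width * height * bits_per_pixel // 8

FRAME = frame_bytes(640, 480, 24)   # one 640x480 frame in 24-bit color
RATE = FRAME * 24                   # bytes per second at film speed

CDROM_RATE = 150 * KIB              # single-speed CDROM transfer rate
CDROM_CAPACITY = 650 * MIB          # assumed disc capacity
HD_RATE = 200 * KIB                 # upper end of the IBM-AT hard disk figure

print(f"bytes per frame:              {FRAME:,}")
print(f"bytes per second:             {RATE:,} ({RATE / MIB:.2f} MiB/s)")
print(f"seconds per frame from CDROM: {FRAME / CDROM_RATE:.1f}")
print(f"seconds of video per CDROM:   {CDROM_CAPACITY / RATE:.1f}")
print(f"AT disk shortfall:            {RATE / HD_RATE:.0f}x too slow")
```

The last line makes the gap concrete: even at 200 KBytes/s, the AT's disk delivered less than one percent of the data rate that full-screen playback needed.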

Video Editing

Television shows had been shot and stored on video tape since the advent of high quality tape recording devices in the 1950s. But editing videotape was much harder than editing film, and film continued to dominate in Hollywood. Moving pictures shot on film consist of a sequence of discrete still images usually shot and projected at 24 frames/s. Movie frames can be examined by eye, so editing film has always been a conceptually straightforward process. The editor examines pieces of film frame-by-frame and selects the correct places for splicing. The film is cut in the thin gaps between images and the pieces of film are glued together. The movie industry has used a variety of elaborate devices to facilitate the rapid examination of film clips to determine the ideal place for the cut, but the cutting process itself was so simple it could be performed by almost anybody.

My father was an 8 mm film enthusiast, and he shot reel after reel of Kodachrome Standard 8 to create a silent family chronicle of every birthday, Christmas morning gift-unwrapping, and family road trip. He had a small splicing machine that he used to concatenate the developed film from small 3" reels of film received after processing onto 7" reels for storage and projection. We watched his home movies in our living room in the 1950's and 1960's using a collapsible screen and an ancient noisy Keystone K-70 projector. Nearly any showing was accompanied by at least one film breakage that required on-the-spot splicing. In high school in the late 1960s, my friends and I used my Dad's 8 mm camera to shoot our amateur productions. We dissected our shots into tiny clips that we reassembled in what we imagined to be the montage style. With a small hand loupe, an X-Acto knife and my Dad's simple film splicer, we could make cuts as frame-perfect (if not as artful) as those in any Hitchcock film. But two decades later, in the late 1980's, high school students making films using their parents' VHS cameras had to adopt a very different cinematic style. They had sound, but despite their modern equipment, they had practically no editing options. They had to make their cuts by turning the recorder on and off, and stage their shots mostly in the order they would appear in the final tape. If they developed an interest in cinéma vérité, it was probably for the best.

The essence of video editing with tape decks is to have two recorders, one with your recorded material (the source), and one with a blank tape onto which you will accumulate your final version. You start at what will be the beginning shot of your production, play it from the source deck and record it onto the blank tape on the other deck. You stop at the place where you want to cut to a new piece of the source material. This you call the "out point" of that first shot. Then you cue up the source deck to the start of the material that you want to come next, the "in point" for that segment. You then record that second segment, adding it to the end of your linearly accumulating final assembly. Using this method, you can assemble your production, from start to finish, adding clips one at a time, cut by cut, in the order they will occur in the final version. Professional editors had equipment that could control video tape players, display time codes, and switch between sources. If they had a lot of tape players and a long list of time codes for in and out points of each cut, they could assemble an edited video by having the machine cue up each clip on a different source deck and switch between sources to assemble the show. But even with this elaborate equipment, video tape editing was linear, in the sense that all cuts had to be made in the order they appear in the final tape. Linear editing would have been more tolerable if video tape recorders could play back video at a range of speeds, go backwards, and jog frame by frame, displaying individual frames and their time codes. If you could do that, an editor could go through all the material ahead of time, determine what material to keep and what should be left "on the cutting room floor", establish the order of clips and write down the time codes for the in and out points of each one. This process would not even have to be done in any particular order.
An editor could select clips from any part of the production and work out how the cut between them would be performed before making decisions about the earlier parts. All of this would be "offline", because no final tape was being assembled. The clip names and time codes for each cut would be compiled into a list that could be handed to the controller that ran the various tape machines. The assembly of the edited video, the "online" component, would then be done almost automatically, by cueing up the clips and programming the in and out points from the edit list into the equipment. All of this would have been possible if those helical-scan video tape machines could have been played at varying speed, backward and forward, and made to jog frame by frame, displaying the image in each frame and its time code without damaging the tape. But they couldn't.
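The offline/online split can be sketched in a few lines of Python. In this toy model, the `Decision` class and `build_edl` function are hypothetical, and 30 frames/s non-drop-frame timecode and a record tape starting at 01:00:00:00 are assumptions. Editing decisions can be recorded in any order; only when the list is built are they sorted into program order and given record-tape timecodes, ready to drive the tape machines. Real edit decision lists, such as the CMX 3600 format, carry more fields, like transition types and audio/video channel flags.

```python
from dataclasses import dataclass

FPS = 30  # assumption: non-drop-frame timecode at 30 frames/s

def tc_to_frames(tc: str) -> int:
    """Convert 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * FPS + ff

def frames_to_tc(frames: int) -> str:
    """Convert an absolute frame count back to 'HH:MM:SS:FF'."""
    secs, ff = divmod(frames, FPS)
    mins, ss = divmod(secs, 60)
    hh, mm = divmod(mins, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"

@dataclass
class Decision:
    position: int  # place of the clip in the finished show
    reel: str      # source tape holding the clip
    src_in: str    # in point on the source tape
    src_out: str   # out point (first frame NOT used)

def build_edl(decisions, record_start="01:00:00:00"):
    """Sort offline decisions into program order and assign record timecodes."""
    events = []
    rec = tc_to_frames(record_start)
    for d in sorted(decisions, key=lambda d: d.position):
        length = tc_to_frames(d.src_out) - tc_to_frames(d.src_in)
        events.append((d.reel, d.src_in, d.src_out,
                       frames_to_tc(rec), frames_to_tc(rec + length)))
        rec += length
    return events

# Decisions made out of order: the second shot was chosen first.
decisions = [
    Decision(2, "REEL02", "00:10:00:00", "00:10:05:00"),
    Decision(1, "REEL01", "00:02:00:00", "00:02:03:15"),
]
for number, event in enumerate(build_edl(decisions), start=1):
    print(number, *event)
# 1 REEL01 00:02:00:00 00:02:03:15 01:00:00:00 01:00:03:15
# 2 REEL02 00:10:00:00 00:10:05:00 01:00:03:15 01:00:08:15
```

The list, not any tape, is the product of the offline stage; the online stage only has to follow it.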

The dilemma this posed for the magnetic video recording industry in the 1970's and 1980's is described in John Buck's lovely and fascinating book Timeline. One of the many twists in the story is that the solution to the shortcomings of video on magnetic tape was another product from the same industry, the magnetic hard drive. Although video frames stored on helical scan magnetic tape (at that time mostly manufactured by Memorex) could not be accessed and displayed in both directions or at arbitrary speeds, video frames on magnetic disk drives (another Memorex product) could. Video frames stored on hard drives could not be accessed in full resolution at realistic frame rates, as they could from analog tape. But for purposes of just making editing decisions, the images could be smaller, have lower temporal resolution, or even be displayed in grayscale. Clips stored on disks could be cut digitally into pieces at any frame boundary and recombined arbitrarily. The product of this would not be a broadcast quality production, but rather an editing decision list that could be used to program existing online tape equipment to assemble the final cut. The ideas were there before the equipment was really ready, but the first commercial nonlinear video editing system of this type was built by a joint venture between Memorex and CBS television, called CMX.

The system used existing online technology for making the final tape, but included an offline editing component consisting of a DEC PDP-11 driving two CRT screens. One screen had a light pen user interface for entering commands. Video clips were converted to monochrome and loaded into an enormous early Memorex hard disk subsystem that looked something like a top-loading washing machine. To specify a cut, the preceding clip was displayed on the left hand screen. Although it was a crude rendering of the image, the editor could scan through it at various speeds and jog frame by frame, all the things that could be done with film but not with video tape, to select the out point for that clip. The incoming clip was displayed on the right hand screen, and was likewise manipulated to find the desired in point. When that was done, the edit was added to a text file called the edit decision list. When all the cuts were specified, the list was transferred to the online system, which could create the full resolution final recording on video tape for broadcast. Watching a demo of the system, you can see that the process was easy, but not very versatile. It didn't do anything fancy. The images the editor was working with were crude, and to get the storage down and the frame rate up, the system only displayed every other frame. On top of that, the price tag for this equipment was a hefty $500,000. And remember, this was 1972. That price was in pre-Carter dollars; adjusted for inflation to 2013, it comes to about $2.5 million. The price was high because every part of this was ahead of its time, but especially the disk storage. This application required way too much space for the disks available at that time. Still, the CMX was a glimpse of the solution to a major problem in video editing. When hardware that could support it at a reasonable price became available, there would be a product that would make offline nonlinear video editing available to everyone. That hardware was the Macintosh II, and the product was Avid 1.

The Birth of Avid

The Avid video editor was not originally planned to be a Macintosh product. Bill Warner and most of his associates at Avid had been employees of the Apollo Computer company. Apollo did not make personal computers. They made high end computer workstations used by scientists and engineers. Apollo dominated the workstation market through much of the 1980's, and although they lost ground in the last years of the 80's, they and their Domain/OS based machines were greatly respected, especially in the field of computer graphics. Their competition was DEC and Sun, not Apple or the various makers of IBM PC clones. So Warner had not considered building his video editor on the Macintosh, which he assumed would be underpowered for the task. He had built a demonstration of his planned system on the Apollo, and brought it to the National Association of Broadcasters (NAB) show in 1988. The system was similar to the CMX, in that it loaded low resolution video clips from the original video tape and stored them on fixed disk storage. The difference was that it used mostly off the shelf computer parts. The most important component, the large array of fixed disks, was off the shelf but still pretty expensive at the time, and this helped to account for the still-hefty price tag, between $50,000 and $85,000 (depending on disk space). The system employed offline editing to make an edit decision list that would be used by an online system to make the final production. According to Warner's own account, Avid attended the NAB show in 1988, not as an exhibitor, but to show their demo in a private room to collect the impressions of professional editors. At that point the user interface for the system had not been finalized.
In fact, they showed two different user interface systems in the demo at the show, one using the standard source and record windows (like the source and record monitors of video online editing and the CMX), and one that used a filmstrip interface, meant to emulate film editing. Both approaches had advantages and advocates. In the end, the Avid system would support both approaches to editing. Nearly all subsequent products have continued to do the same.

Avid Moves To Macintosh

Avid was an exhibitor at NAB the next year, in 1989, but the system they showed there did not run on the Apollo workstation. It ran on the Macintosh II. Between the two NAB meetings, Warner had taken the Apollo-based Avid prototype to the SIGGRAPH meeting in Atlanta in 1988. It was well received, and one of the people who saw it was Apple's multimedia project manager Tyler Peppel. Warner says that Peppel approached him and said he should move the editor to the Macintosh II. Warner didn’t expect much of the Macintosh. The prevailing view in the technology press was that the Macintosh was a cool idea, a fun but underpowered toy that was not ready for serious work. Warner certainly did not expect that a $6,000 personal computer with a single-tasking operating system would be a match for the much more costly multiuser Apollo workstation. When the Avid team returned home from the show, they found large packages waiting for them, containing Macintosh IIx computers. Peppel had taken the initiative to ship them to Avid, to convince them to try moving their (not yet finished) software to the Macintosh. This was a bold move. I wonder how often things like this were done; I have never heard of another instance. It was a risky but brilliant gamble that delivered an entire market to Apple.

Apple also sent an engineer, George Maydwell, to help the Avid programmers get started with the Macintosh. There were two bottlenecks in video editing software. One was the speed at which video frames stored in memory could be drawn to the screen, and the other was the speed at which frames could be moved from disk storage into memory. Video frame rate is still a familiar problem for high end video gamers, who measure their systems by how many full frames of imagery they can draw to the screen every second. Avid was using images much smaller than full screen. This was required to reach the desired frame rate, because video systems were slower then. Video cards, like hard disk controllers, communicated with the CPU and main memory over the I/O bus. Apollo at one time had used the VME bus, which was popular for workstations (also used by Sun), but in the late 1980's they were using the AT bus used by IBM PC-AT clones (to take advantage of the PC peripherals market). The Avid engineers were able to write reduced-size images to the video monitor on the Apollo at about 9 per second. Their target for the shipping product was 15. They hoped ultimately to achieve 30 frames per second, approximately the frame rate of NTSC television. George Maydwell did some tests with the Macintosh IIx. He got the Mac to write frames of the same size to its screen at 45 frames/s. I'm sure this blew Warner's mind, but the disk tests were even more surprising. Disk drive manufacturers at that time claimed maximal read speeds of about 1000-1500 KBytes per second. But in practice, Warner and his team were getting about 200 KBytes per second on the Apollo. When Avid engineer Eric Peters tested a Macintosh IIx they had been given, they were shocked to get read speeds of about 1200 KBytes/s. This was 6 times the speed they got on the Apollo, and practically the same as the disk manufacturers' best-case claims.
This was even more astonishing because they did not need any special hacks to get these speeds. They got them using standard calls to the Mac OS (System 6) File Manager. This file system performance was available to any Macintosh programmer at the time, using the normal system calls for reading and writing data from disk. Warner and his team decided to leave the Apollo behind and move their system to the Macintosh, with only months left before it had to be ready to show at the 1989 NAB show.

Why was the Macintosh so much faster than the Apollo? What kind of performance would Warner and his programmers have seen if they had tried their tests on one of the high end IBM-PC AT clones available at the time? No need to guess. The Apollo's performance was inferior because it was using the PC AT bus, both for disk access and video. The miserable disk performance of the IBM-PC AT was well documented, if not widely appreciated. For example, in January 1987 Byte magazine ran the results of tests performed at Arizona State University, comparing 12 high end IBM AT-type computers. The tests included a hard disk sequential read test. This is the best case for disk access, in which the computer reads data stored contiguously on the disk. It is the test you care about if you need to read a big file into memory. It took 1.82 to 3.92 seconds for the tested machines to read a 512 KByte file. This is about 130-280 KBytes/s, in line with the roughly 200 KBytes/s Warner says the Avid group were getting on their Apollo machines at about the same time. Of course they were. What else could have happened? The Apollo used the same AT bus and disk controllers. This was a selling point for Apollo (or at least they thought it was) because it meant Apollo customers could use PC-compatible disk systems. Disk drives at that time claimed around 1,500 KBytes/s, roughly 5 to 10 times what the PC (or Apollo Domain/OS) user could hope to get. Of course, no Apple computer was included in the test results published in the Byte magazine article. But in 1987 the Macintosh II (and most other Macs) achieved 1.25 MBytes/s from disk, because of their integrated SCSI controller. SCSI read speeds increased to 5 MBytes/s for the Quadra family, starting in 1991. Remember that the PC clones also accessed video over the same I/O bus, the AT bus (later called ISA), which was 16 bits wide and ran at 8 MHz.
For video, the Macintosh II had the advantage of an advanced I/O bus called NuBus, which was 32 bits wide, ran at 10 MHz, and so could theoretically move about 38 MBytes per second. In 1987, IBM tried to fix the bottleneck caused by their ISA bus, replacing it on their PS/2 line of machines with a new 32 bit 10 MHz bus architecture called Micro Channel. Micro Channel offered Macintosh-like performance on the new line of IBM personal computers. But PC users mostly did not buy the new IBM systems, because they were proprietary, too expensive, and not sufficiently compatible with the I/O boards they had already bought. Ironically, those I/O boards were the problem. PC users in subsequent years spent a lot of money buying the newest, fastest disk drives, never knowing that they were getting little or no benefit from the improvement in speed. During this period the computer press, most notably Byte magazine, did not publish performance comparisons between IBM clones and Macs. They claimed that the systems were so different that there were no suitable benchmarks that could be used. The IBM-PC AT architecture lived on for years and its shortcomings went mostly unknown to the purchasers of these systems. The disk and video bottleneck of the IBM-PC AT and its clones would not be cured until the introduction of the PCI bus in 1993. During the period from 1988 to 1993, the PC AT clones were preferred by businessmen and journalists for spreadsheets and word processing, but the Macintosh monopolized the personal computer excitement in I/O-intensive tasks like video editing. It was good luck for Apple that Bill Warner and his team worked for Apollo, and not for Sun.
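The throughput figures in the last two paragraphs can be recomputed the same way. The sketch below uses only numbers from the text. `peak_bus_bytes` is a hypothetical helper that assumes one full-width transfer on every clock cycle, which is the simplification behind the 38 MBytes per second quoted for NuBus; real buses spent extra cycles on arbitration and addressing, so it gives an upper bound, not a measured rate.

```python
MIB = 1024 * 1024

def throughput(size_kbytes: float, seconds: float) -> float:
    """Sequential-read throughput in KBytes per second."""
    return size_kbytes / seconds

def peak_bus_bytes(width_bits: int, clock_hz: float) -> float:
    """Naive peak bus rate: one full-width transfer on every clock cycle."""
    return width_bits / 8 * clock_hz

# Byte, January 1987: a 512 KByte file read in 1.82 to 3.92 seconds.
fastest_at = throughput(512, 1.82)
slowest_at = throughput(512, 3.92)

# Sequential reads reported by the Avid team, in KBytes per second.
apollo, mac_iix = 200, 1200

nubus = peak_bus_bytes(32, 10e6)   # 32 bits at 10 MHz

print(f"AT sequential read: {slowest_at:.0f}-{fastest_at:.0f} KBytes/s")
print(f"Mac IIx vs Apollo:  {mac_iix / apollo:.0f}x")
print(f"NuBus naive peak:   {nubus / MIB:.1f} MiB/s")
```

The Byte timings come out at roughly 130 to 280 KBytes per second. The NuBus figure is 40 million bytes per second, which is about 38 MBytes per second when a megabyte is taken as 2^20 bytes.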

No Help For You

The first video editing systems for the Macintosh, including the Avid 1, were programmed without the benefit of any operating system support for video. This was not unusual. Programmers in those days didn't expect much help from libraries or OS-provided facilities. This may sound odd to today's programmers, who usually assemble their software from a set of libraries that do the bulk of the hard work. But nobody had thought much about video editing on computers before; this was unexplored territory. That meant the Avid 1 was written from the ground up, including the creation of a disk format for video data, user interface code, and the design of the user experience. The fact that the basic Avid system has been in large part copied by everyone since then is a tribute to the choices they made.

If the video editing software business had followed the usual pattern, other programmers would have tried to reverse engineer Avid's document formats to make "Avid-compatible" systems, and would have had to write all of the code from scratch. That would have made coding a work-alike competitor to Avid almost as big a job as writing the original (except for the creative part). It didn't happen that way, because Apple created a complex and capable API for programmers wanting to write video editors. Quicktime included a file format and libraries for reading and writing files and putting video data into memory. But it was a lot more than that. It was a complete kit for making a video editing program. Video and audio clips in memory were maintained in separate parallel tracks, with in-point and out-point specified by subroutine calls. Few application programmers had ever enjoyed this level of operating system support specialized to make their particular kind of program easy to write and maintain.
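What parallel tracks buy the programmer is that an edit applies to picture and sound together. The toy Python model below shows the idea: a selection (an in point plus a duration) is cut from every track at once and returned as a new movie. It is loosely modeled on Movie Toolbox calls such as `SetMovieSelection` and `CutMovieSelection`, but the classes and method names here are hypothetical, and real Quicktime tracks referred to media on a time scale rather than holding one list element per time unit.

```python
from dataclasses import dataclass, field

@dataclass
class Track:
    kind: str      # "video" or "audio"
    samples: list  # one entry per time unit, for simplicity

@dataclass
class Movie:
    tracks: list = field(default_factory=list)
    sel_start: int = 0
    sel_duration: int = 0

    def set_selection(self, start: int, duration: int) -> None:
        """Set the in point (start) and the length of the selection."""
        self.sel_start, self.sel_duration = start, duration

    def cut_selection(self) -> "Movie":
        """Remove the selection from every track and return it as a new movie."""
        lo, hi = self.sel_start, self.sel_start + self.sel_duration
        clip = Movie()
        for track in self.tracks:
            clip.tracks.append(Track(track.kind, track.samples[lo:hi]))
            del track.samples[lo:hi]
        self.sel_duration = 0
        return clip

movie = Movie([Track("video", list("ABCDEFGH")), Track("audio", list("abcdefgh"))])
movie.set_selection(2, 3)
clip = movie.cut_selection()
print([t.samples for t in movie.tracks])  # [['A', 'B', 'F', 'G', 'H'], ['a', 'b', 'f', 'g', 'h']]
print([t.samples for t in clip.tracks])   # [['C', 'D', 'E'], ['c', 'd', 'e']]
```

Because the cut is expressed once against the movie rather than once per track, picture and sound cannot drift out of sync.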

Video Everywhere

Why? I mean why would Apple go to so much trouble to help a handful of applications programmers make a very specialized program, at least one of which had already been written without their help? Video editors were (still are) a tiny market. The immediate profit for Apple was small, and I'm sure there were voices at Apple asking how they were going to make money this way. Nonlinear video editing was a big deal in television, but Apple's take on each video editing workstation sold was pretty small. Out of the $85,000 a customer spent on an Avid, Apple's take was less than $6000.

It was a wise piece of foresight, but not one unique to Apple. They, like everybody else, knew that before long video would not be just for television. Video would soon be delivered directly to the computer for playback, bypassing video tape, broadcast, and even the television set. Video would not be just for entertainment, but would become embedded in everything from income tax preparation programs to educational programs for kids and scientific and technical manuals. Eventually videos would be embedded in essays, eBooks, and electronic magazines.

The video creation and editing capabilities built into Quicktime allowed ordinary users to use video as just another media component in a document. And eventually every family would have a video enthusiast taking footage of every child's first steps and birthday parties, vacations, weddings, and even births. Hopefully, all that video would be edited before being seen, and it would require a lot of editing.

But to do that, video needed to work on any Macintosh, not just ones equipped with expansion slots and special video boards. And there was a whole list of technical problems that had to be solved to do that, especially on a computer architecture that was not designed with video in mind. The first problem was simply timing the display of video frames.

Real Time

It is not a coincidence that the CMX system used a PDP-11 running its RT-11 operating system. The RT in RT-11 stood for Real Time. RT-11 was a programming environment designed for tasks that had to happen on schedule, like control of machine tools or scientific instruments. Boards installed in the I/O bus of a PDP-11, including timers, usually worked by interrupting the ongoing program. When an interrupt happened, an application-specific piece of code, called an interrupt service routine, would be called immediately. Immediately means that it was guaranteed to run within some specified short latency, regardless of whatever else the computer was doing. The designers of RT-11 assumed that applications would communicate with the outside world via interrupts, and that applications programmers would write interrupt service routines and install them as a part of their applications. They did, but PDP-11 programmers mostly wrote specialized in-house software to work with a specific set of I/O boards, and RT-11 ran only one program at a time. The Macintosh came with all of the hardware and software support needed to do real time computing. It even had programmable timers built right onto the logic board (in the Versatile Interface Adapter chip) starting with the first model. The processor had flexible support for interrupts and interrupt service routines, including timer-driven interrupts. But the information-appliance design of the original Macintosh did not anticipate I/O expansion by users or programmers. All of its real time machinery was already claimed by the operating system designers for real time tasks like tracking the mouse, drawing video on the screen, making sounds, and operating the serial ports and disk drives. To keep the cost of the Macintosh down, no capabilities were added that were not needed for these built-in functions, and applications programmers were not expected to mess with any of that stuff.
In the original Macintosh (the 128k and 512k models) with the 64K ROM, there was no operating system support for programmers who wanted to do precisely timed tasks. The operating system was designed to optimize graphics and disk performance and to respond promptly to the user, but not to offer reliable timing of anything else. Some programmers had to time things anyway, and used the only mechanisms available. These consisted mainly of the tick count and the vertical blanking interrupt. If you just wanted to see how long something had taken (like the time between mouse clicks), the tick count gave the time to the nearest 1/60 of a second, and was available as a Low Memory Global called Ticks. Application code that needed to run periodically could be attached to the vertical blanking (VBL) interrupt, a real interrupt that occurred reliably 60 times per second, during the vertical retrace of the compact Macintosh's built-in display. You could ask the operating system to execute some small piece of your code after it had done the rest of what it needed to do at that time. Early animation programs had used the VBL method to update video images. It not only guaranteed reliable timing, but also prevented flicker when updating the screen, because the code executed while the CRT's electron beam was blanked. But intervals restricted to 1/60 of a second, or an integral multiple of that, were too inflexible. Programmers had tasks that had to be on time but go faster or slower than that.
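The limitation of the VBL approach is easy to see by listing the rates it allows. A task that runs once every n vertical blanks runs at 60/n times per second, so only 60, 30, 20, 15, 12, and so on are available. The sketch below uses hypothetical function names and the nominal 60 Hz rate given in the text, and shows that film's 24 frames per second is not among those rates.

```python
from fractions import Fraction

VBL_HZ = 60  # nominal vertical blanking rate, as given in the text

def vbl_rates(max_divisor: int = 6) -> dict:
    """Rates available to a VBL task that runs once every n blanks."""
    return {n: Fraction(VBL_HZ, n) for n in range(1, max_divisor + 1)}

def ticks_to_seconds(ticks: int) -> float:
    """Convert a difference in Ticks (1/60 s units) to seconds."""
    return ticks / VBL_HZ

def nearest_vbl_rate(target_fps: float, max_divisor: int = 6):
    """The achievable VBL rate closest to a desired frame rate."""
    rates = vbl_rates(max_divisor)
    n = min(rates, key=lambda k: abs(rates[k] - target_fps))
    return n, rates[n]

print([float(r) for r in vbl_rates().values()])  # [60.0, 30.0, 20.0, 15.0, 12.0, 10.0]
print(nearest_vbl_rate(24))                      # (3, Fraction(20, 1))
print(ticks_to_seconds(90))                      # 1.5
```

Playing 24 frames/s material on VBL timing means either running it at 20 or 30 frames/s, or holding frames for an uneven alternation of 2 and 3 blanks, which is why a more flexible timer mattered.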

With the introduction of the Mac Plus and its advanced (128k) ROM, some help was provided for programmers wanting to execute code with precise timing. One of the two timers in the Macintosh had been responsible for timing the disk drive, but the new 800k Sony disk mechanism did its own timing, so Apple turned the use of that timer over to programmers. The original Time Manager, introduced at that time, gave programmers a safe method for using the built-in timers on the Macintosh logic board to execute a subroutine after some fixed delay, or to execute it repeatedly at some fixed interval. And it queued timing tasks so multiple programs could use the timer.

The Time Manager

The original Macintosh design included two high resolution timers that could be used to interrupt the CPU. They were part of the Versatile Interface Adapter. Each timer could be loaded with a 16 bit value and would count down to zero, at which point it could generate an interrupt. The clock driving the countdown for the VIA timers was the Enable Clock, which ran at 783.36 kHz (1/10 the processor clock), so each count took about 1.277 µs, and a full 16 bit countdown spanned about 83.7 ms. Timer 1 on VIA 1 was used to time sound generation. Starting with the Macintosh Plus and 512Ke, Timer 2 was available for use by the Time Manager. Later Macintosh models added a second VIA and changed the way the Enable Clock worked, but the Time Manager kept using Timer 2 of VIA 1 and kept the 783.36 kHz countdown rate, independent of changes in processor speed. The Time Manager was originally written by Gary Davidian. It allowed a programmer to install an interrupt service routine into a queue, and then request that the routine be run after a specified amount of time had passed. The queue could hold many routines, installed by different programs, and they were stored in order of scheduled execution. The timer was set to expire at the time requested by the routine scheduled to run soonest. The original version had a minimum delay interval of 1 ms; that is, the delay time was specified in milliseconds. Times were represented internally using a 36 bit number, so delays up to about 24.3 hours could be requested. In a later version, time delays could be specified in microseconds by entering a negative value for the delay. In that case, the maximum delay was about 35 minutes. Of course, executing the code to set up a timed execution took some time, and the Time Manager had to subtract that from the delay. This was not designed with Quicktime in mind.
For Quicktime, an additional modification was made to prevent the accumulation of delays caused by reinstalling repetitive timed events after each delay expires, as happens when playing video frames. In the Quicktime-era version of the Time Manager, super-simple repetitive tasks could be reliably timed to occur at astonishingly brief intervals (20 µs). Other popular brands of personal computer had no comparable support for precise timing of code execution.
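Two parts of this description can be sketched concretely. The first is the timer arithmetic. At 783.36 kHz a count lasts about 1.277 µs, and a 16-bit countdown spans about 83.7 ms. If the 36-bit internal value counted VIA ticks (an assumption; the text does not say what it counted), its range comes to about 24.4 hours, close to the figure given above. Likewise, a signed 32-bit count of microseconds gives about 35.8 minutes. The second part is the queue and the drift problem. The Python `TimeQueue` below is a hypothetical toy, not the Time Manager's API; the real extended Time Manager exposed calls such as `InsXTime` and `PrimeTime`. The toy keeps tasks ordered by wake-up time and can re-arm a periodic task in either of two ways. The naive way counts from when the task was re-installed, so per-frame overhead accumulates. The drift-free way counts from the task's original wake-up time, which is the behavior Quicktime needed.

```python
import heapq
import itertools

VIA_HZ = 783_360           # VIA countdown rate given in the text
TICK_US = 1e6 / VIA_HZ     # about 1.2766 microseconds per count

def span_ms(bits: int) -> float:
    """Longest interval an unsigned counter of `bits` bits can time, in ms."""
    return 2 ** bits * TICK_US / 1000

class TimeQueue:
    """Toy time queue: tasks kept in order of wake-up time (microseconds)."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker for equal wake-up times

    def insert(self, wake_at, name, period=None):
        heapq.heappush(self._heap, (wake_at, next(self._seq), name, period))

    def run_until(self, end, drift_free=True, overhead=0):
        """Fire every task due by `end`, re-arming periodic ones.

        `overhead` is the delay between a task's wake-up and the moment it
        re-installs itself. Naive re-arming counts the next period from that
        later moment, so the overhead piles up; drift-free re-arming counts
        from the original wake-up time.
        """
        fired = []
        while self._heap and self._heap[0][0] <= end:
            wake_at, _, name, period = heapq.heappop(self._heap)
            fired.append((name, wake_at))
            if period is not None:
                base = wake_at if drift_free else wake_at + overhead
                self.insert(base + period, name, period)
        return fired

print(f"16-bit countdown: {span_ms(16):.1f} ms")            # 83.7 ms
print(f"36-bit range:     {span_ms(36) / 3.6e6:.1f} hours")  # 24.4 hours
print(f"2**31 µs:         {2 ** 31 / 60e6:.1f} minutes")     # 35.8 minutes

for drift_free in (False, True):
    q = TimeQueue()
    q.insert(0, "frame", period=41_667)  # roughly 24 frames per second
    frames = q.run_until(1_000_000, drift_free=drift_free, overhead=100)
    print(drift_free, len(frames), frames[-1][1])
# False 24 960641
# True 24 958341
```

After one second of playback the naive version's last frame is 2.3 ms late, and the error keeps growing with every frame. The drift-free version stays on its schedule.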

It was essential to be able to flexibly time drawing frames on the screen, not just at one speed (as in playback), but at any speed (as needed in editing). But there was a lot more that had to go into making an API for video editing.

Quicktime Does it All

These days it is common to expect the operating system and libraries to do a lot for you. But Quicktime was way more than anybody was accustomed to at that time. It made it quick and easy to write a program to find a Quicktime file on disk and play it on your computer, or to incorporate playing movies or recorded sound in your existing program. It made it possible, even pretty easy, for programmers to support cut and paste for video data between windows or documents, and even to store video data in the clipboard or Scrapbook for use by other programs. The most surprising thing about Quicktime was the built-in user interface features. This kind of thing was not common. Libraries sometimes did a lot for you, but they never seemed to help with user interface. Quicktime gave all programs a standard set of user interface items for controlling playback, selecting digitizers and compression algorithms, and setting the host of parameters that controlled the complex problems of video digitization and coding. It would even create a window, provide a full set of controls for the user to play video, and handle all events in its windows. But that was only the beginning. Quicktime was basically a complete library for creating video editing programs. It included standard code for selecting a video digitizer, copying and pasting movies, even setting a selection in a movie (effectively setting in and out points for an edit), and cutting or copying only that selection. It included built-in support for superimposing styled text on movies, and even for scrolling text to make titles and credits. All that made it much bigger than other Apple APIs at the time. In fact it was not one API, but rather a collection of them. To make a movie on a computer, a number of things need to be in place. First, there has to be a way to digitize analog data. In 1991, nearly all video was stored on analog media.
Apple did not provide the video digitizer cards that would be used to capture analog video. They would later make computers with this capability built in, but Apple could never hope to control the video digitizer market, so for video they had to abandon their usual vertical integration of hardware and software. The usual alternative to total control (which people called "open") was to let each manufacturer of video digitizers write its own software to work exclusively with its own hardware. Apple’s solution was a framework that let hardware manufacturers build small hardware-specific software components, comparable to drivers, that delivered data to Quicktime in a standard way. Unlike a classic driver, these components had to work interactively with the user, who needed to control the various parameters of the digitizer (e.g. video resolution, bits per pixel, frame rate). Ideally, this interaction would look about the same for all kinds of digitizers, so Quicktime included a protocol for video digitizers that relieved them of the job of interacting directly with users. This also solved a problem for application designers. Because they didn’t have to write the code that interfaced with the digitizer board, they didn’t have to know much about it. If your program worked well with Quicktime, it would work with practically any video digitizer board, and users could swap out digitizer boards without any change to their existing video software. To create this kind of arrangement, Quicktime needed a plug-in architecture. Apple created a general-purpose plug-in architecture, not exclusively for video, originally called the Thing Manager (later renamed the Component Manager). A video digitizer board could come with one relatively small piece of software, a video digitizer component ('vdig').
Quicktime would look for a video digitizer component and its hardware, and would give any Quicktime application access to the digitizer.
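The plug-in arrangement described above can be sketched in Python: applications ask the framework for a kind of component, vendors register implementations of a common interface, and the framework rather than the vendor decides how settings are presented. Every name here (`VideoDigitizer`, `register_component`, `find_component`, `default_settings`, the `AcmeSpigot` board) is hypothetical; the real Component Manager was a C API with its own calls, which this sketch does not reproduce. Only the four-character type `'vdig'` comes from the text.

```python
from abc import ABC, abstractmethod
from typing import Optional


class VideoDigitizer(ABC):
    """Hypothetical interface every digitizer plug-in must implement."""

    @abstractmethod
    def parameters(self) -> dict[str, list]:
        """Settings the hardware supports, preferred choice first."""

    @abstractmethod
    def grab_frame(self, settings: dict) -> bytes:
        """Capture one frame using the chosen settings."""


# Registry keyed by four-character component type.
_registry: dict[str, list[type[VideoDigitizer]]] = {}


def register_component(kind: str, cls: type[VideoDigitizer]) -> None:
    # A vendor's plug-in calls this when it is installed.
    _registry.setdefault(kind, []).append(cls)


def find_component(kind: str) -> Optional[VideoDigitizer]:
    # Applications ask for a kind of component, never for a specific board.
    classes = _registry.get(kind, [])
    return classes[0]() if classes else None


def default_settings(digitizer: VideoDigitizer) -> dict:
    # The framework, not the vendor, owns the settings UI; this stand-in
    # simply takes the first (preferred) choice for each advertised parameter.
    return {name: choices[0] for name, choices in digitizer.parameters().items()}


class AcmeSpigot(VideoDigitizer):
    """A hypothetical vendor component: the only code that knows the hardware."""

    def parameters(self) -> dict[str, list]:
        return {"frame_rate": [30, 15], "bits_per_pixel": [16, 8]}

    def grab_frame(self, settings: dict) -> bytes:
        # Stand-in for reading the board: a blank 4x3 frame, one byte per pixel.
        return bytes(4 * 3)


register_component("vdig", AcmeSpigot)
```

An application written against `find_component("vdig")` keeps working if `AcmeSpigot` is replaced by some other registered class, which is the property the text describes: users could swap boards without changing their video software.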

Most video cards at that time represented pixels using 8 bits. The mapping of colors to the 256 possible pixel values was flexible, but to display any one picture you had only 256 colors to work with. To represent full-color images, colors were dithered: a color missing from the palette was approximated by placing a cluster of other colors next to each other on the screen, which the eye blends together. If you were Avid, writing your first video editor, you had to figure out how to do this yourself. After Quicktime, programmers could be almost unaware of the entire process. At most they had to select a dithering scheme from the options Apple provided.
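Ordered dithering is one common way to do this, sketched below in Python under illustrative assumptions: a fixed 216-color palette (six levels each of red, green and blue, which fits in an 8-bit pixel) and a 4x4 Bayer threshold matrix. This is not a description of the schemes Quicktime actually offered, and `quantize_channel`, `dither_pixel` and `palette_color` are hypothetical names.

```python
import math

# 4x4 Bayer matrix: each entry 0..15 sets the rounding threshold for one screen position.
BAYER_4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]
LEVELS = 6                   # levels per channel; 6 * 6 * 6 = 216 palette entries
STEP = 255 / (LEVELS - 1)    # distance between adjacent levels on the 0..255 scale


def quantize_channel(value: int, x: int, y: int) -> int:
    """Round a 0..255 channel up or down to a palette level, depending on (x, y)."""
    scaled = value / STEP
    base = math.floor(scaled)
    threshold = (BAYER_4[y % 4][x % 4] + 0.5) / 16   # spread evenly over (0, 1)
    level = base + (1 if scaled - base > threshold else 0)
    return min(level, LEVELS - 1)


def dither_pixel(rgb: tuple[int, int, int], x: int, y: int) -> int:
    """8-bit palette index for a full-color pixel drawn at screen position (x, y)."""
    r, g, b = (quantize_channel(c, x, y) for c in rgb)
    return (r * LEVELS + g) * LEVELS + b


def palette_color(index: int) -> tuple[int, int, int]:
    """The 24-bit color that a palette index actually displays."""
    r, rest = divmod(index, LEVELS * LEVELS)
    g, b = divmod(rest, LEVELS)
    return tuple(round(level * STEP) for level in (r, g, b))
```

A mid-gray of 128 falls between the palette grays 102 and 153. Across any 4x4 block, half the pixels get each, so the block averages 127.5, which the eye reads as the missing gray.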

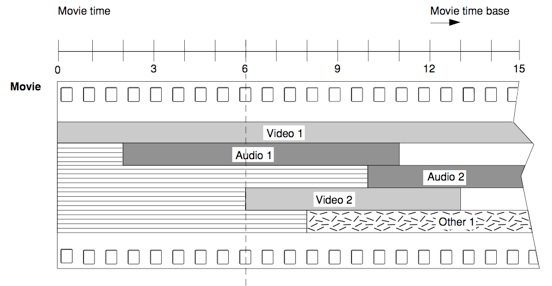

Finally, Quicktime provided an API for storing, reading, displaying and editing multimedia data, called the Movie Toolbox. The Movie Toolbox viewed a movie as a containment hierarchy. This kind of thing was the rage at Apple at that time (see, for example, AppleScript), and was a reflection of the growth of object-oriented programming. The top-level container is a data structure called a Movie. But the Movie is just a data structure; it doesn't contain any of the actual pictures or sound. The Movie contains data structures called Tracks, which represent independent streams of data, for example sound channels or video clips. Tracks are synchronized by the Movie's time base, so they correspond directly to the tracks shown in the timeline of a video editing program. But Tracks are also just data structures; they don't contain any of the actual sound or video data either. Each Track is associated with a Media, which also doesn't contain the data but does hold a direct reference to it, whether it is stored in a disk file or in memory. The Media knows how to find the data for use by Tracks and Movies. The Movie file itself contains the data needed to populate all these data structures, but it does not necessarily contain the actual sounds or pictures of the movie. It is possible to tuck everything into one file, but it is not necessary, and this can save a lot of space: an editor might have multiple movies that use the same data, and the space-eating data do not have to be duplicated for each Movie.
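The containment hierarchy, and the space saving that comes from referring to data rather than copying it, can be sketched with a few Python dataclasses. The class names echo the text's Movie, Track and Media, but the fields, the `MediaData` stand-in and the `distinct_data` helper are hypothetical and are not Quicktime's actual structures, which were opaque. The file names and sizes in the example are made up.

```python
from dataclasses import dataclass, field


@dataclass
class MediaData:
    """Stand-in for the actual samples, stored in a file or in memory."""
    location: str
    size_bytes: int


@dataclass
class Media:
    """Holds a reference to the sample data, not a copy of it."""
    data: MediaData


@dataclass
class Track:
    kind: str        # e.g. "video" or "sound"
    media: Media


@dataclass
class Movie:
    name: str
    tracks: list[Track] = field(default_factory=list)


def distinct_data(movies: list[Movie]) -> list[MediaData]:
    """Every piece of sample data referenced by the movies, each counted once."""
    seen: dict[int, MediaData] = {}
    for movie in movies:
        for track in movie.tracks:
            seen.setdefault(id(track.media.data), track.media.data)
    return list(seen.values())


# Hypothetical example: two edits that share the same footage.
interview = MediaData("interview.mov", 500_000_000)
music = MediaData("music.aiff", 40_000_000)
rough_cut = Movie("rough cut", [Track("video", Media(interview))])
final_cut = Movie("final cut", [Track("video", Media(interview)), Track("sound", Media(music))])
stored_bytes = sum(d.size_bytes for d in distinct_data([rough_cut, final_cut]))
```

Both movies point at the same interview data, so the footage is stored once (540 million bytes in total here) rather than once per movie.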

Also reflecting the trends of the time, Apple made all these data structures opaque. The programmer wasn't supposed to manipulate them directly or access any of their secrets. Everything was done by subroutine calls that operated on the data structures. You started by creating a Movie object or reading it from disk, and then added tracks to it, or queried the tracks it contained to get their attributes. If you wanted to do something low-level, it could seem pretty complicated. For example, to access a single frame of your movie, you had to work your way down from the Movie to the Track to the Media, where you could find a reference to your frame (called a sample). But it was amazing how easy it was to write a media player. In a few minutes, you could embed a video player in your application, including support for cut and paste of videos, and the code to do this was supplied in lots of sample code. Support for converting video formats, and even for digitizing video in your application, came along with no extra effort. While Microsoft was busy just trying to make a media player for its users, Quicktime made a video player a good first project for beginning programmers. Looking at Quicktime made you want to write a video editing program, and a lot of people did. But nobody did it as many times as Randy Ubillos.
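The two ideas in this paragraph, opaque structures reached only through function calls and the walk from Movie to Track to Media to find a sample, can be sketched in Python. Python can only signal opacity by the leading-underscore convention (in C the usual technique is to hand out pointers to structures whose layout is never published), and every function name here is hypothetical rather than a Quicktime call. The sketch assumes each track starts its media at a fixed movie time and that each sample has a known duration in the same time units.

```python
from bisect import bisect_right
from itertools import accumulate


class _Media:
    def __init__(self, durations, samples):
        ends = list(accumulate(durations))
        self._starts = [0] + ends[:-1]   # media time at which each sample begins
        self._end = ends[-1] if ends else 0
        self._samples = list(samples)


class _Track:
    def __init__(self, media, movie_offset):
        self._media = media
        self._offset = movie_offset      # movie time at which this track's media starts


class _Movie:
    def __init__(self, tracks):
        self._tracks = list(tracks)


# Public API: callers hold these objects but only ever pass them to functions.
def new_media(durations, samples):
    return _Media(durations, samples)


def new_track(media, movie_offset=0):
    return _Track(media, movie_offset)


def new_movie(tracks):
    return _Movie(tracks)


def movie_track(movie, index):
    return movie._tracks[index]


def track_media(track):
    return track._media


def track_to_media_time(track, movie_time):
    return movie_time - track._offset


def media_sample_at(media, media_time):
    if not 0 <= media_time < media._end:
        raise ValueError("time is outside this media")
    return media._samples[bisect_right(media._starts, media_time) - 1]


def frame_at_movie_time(movie, track_index, movie_time):
    """Work down from Movie to Track to Media to reach one sample."""
    track = movie_track(movie, track_index)
    media = track_media(track)
    return media_sample_at(media, track_to_media_time(track, movie_time))
```

The indirection is the price of opacity: the caller never learns how samples are stored, so the library is free to change that layout without breaking programs.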

Randy Ubillos and Premiere

Acquiring video required digitizer boards, and the companies that made video boards for the Macintosh were making better and faster ones. One such company, SuperMac, was working on a NuBus-based video digitizer called VideoSpigot, which they envisioned as a no-fuss way to capture an analog video stream on the Macintosh II series of machines. The key to storing video on disk was compression, and SuperMac developed their own codec, which they called Cinepak. The board came with a program called ScreenPlay that was used to capture video and play it back. This was before Quicktime was released, and there was no 'vdig' component for the board yet. SuperMac wanted to give their customers a program to do some simple editing, in addition to capturing and playing back video. Randy Ubillos, who worked for SuperMac at that time, wrote a program called ReelTime for use with files created by ScreenPlay and VideoSpigot. ReelTime did not control the digitizer, but it could take files created by ScreenPlay, join them with cuts and transitions, display the result, and write it to disk. ReelTime was meant to be bundled with the VideoSpigot, but SuperMac was a hardware company. They needed their customers to have a program like this, but they didn't need to be the ones to sell and maintain it. There were lots of potential buyers for ReelTime, but it ended up being sold to Adobe and becoming their first video software product. It was renamed Adobe Premiere, and was bundled with the VideoSpigot and available on its own starting at the end of 1991. It came out about the time Quicktime was released, and although not really a Quicktime product, it used the Quicktime file format and so was Quicktime-compatible. Luckily for Adobe, they also acquired Randy Ubillos, and Premiere version 2 appeared at the end of 1992 with full Quicktime support, including video capture, scrolling titles, and filters.
Premiere was a very cool program, but it was conceived before Quicktime was released, and its feature set was determined independently of what Quicktime could do.

Ubillos was not the only person writing video editors that made use of Quicktime. There were a lot. My favorite video editor of that period was VideoFusion. I think the authors of VideoFusion just wanted to create a tool for exercising every possible feature of Quicktime, even obscure ones whose purpose you couldn't guess. Quicktime (and VideoFusion) had matrix-based viewing transformations, so you could rotate, stretch and warp moving pictures. It allowed masking and compositing, blending and chroma-key, morphing and logical operations between images, and crazy color look-up table operations that could make your home movies look like something from Frank Zappa's 200 Motels, which was the first feature film (if you can call it that) to be shot on videotape.
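Matrix-based transformations of this kind are usually expressed as 3x3 matrices acting on points in homogeneous coordinates, so that one matrix can combine scaling, rotation, translation and even a perspective warp. The Python sketch below assumes that form and a column-vector convention; it uses plain floats and hypothetical helper names rather than Quicktime's own matrix routines.

```python
import math

Matrix = list[list[float]]


def multiply(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b: applying the result means applying b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def scaling(sx: float, sy: float) -> Matrix:
    return [[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]]


def rotation(degrees: float) -> Matrix:
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def translation(tx: float, ty: float) -> Matrix:
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def transform_point(m: Matrix, x: float, y: float) -> tuple[float, float]:
    """Map a source pixel position to the screen; a nonzero bottom row warps."""
    xh = m[0][0] * x + m[0][1] * y + m[0][2]
    yh = m[1][0] * x + m[1][1] * y + m[1][2]
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return xh / w, yh / w


# Shrink a 640x480 frame by half, turn it 90 degrees, and slide it 100 pixels along x.
m = multiply(translation(100, 0), multiply(rotation(90), scaling(0.5, 0.5)))
```

Because composition is just multiplication, a whole chain of effects collapses into one matrix that can be applied to every pixel of every frame.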

Ubillos went on to write the next two versions of Premiere, which proved very popular, and remained involved with Premiere as it was ported to Windows as soon as the PCI bus made that practical. Later, Ubillos got interested in writing another Quicktime-based video editing program, this time for Macromedia. The program, called KeyGrip, was completed, but Macromedia lost interest and sold it to Apple, where it was marketed as Final Cut Pro. Ubillos, now with Apple, was also the force behind the innovative (and controversial) iMovie '08. He continues to be the face of Apple's video editing efforts, including the (also controversial) Final Cut Pro X. It is remarkable that so much of the code used for video editing comes from this one programmer, who had no prior experience as a video editor.

Quicktime for Windows

The Macintosh was where video media was created and edited, but who was going to watch it? For better or worse, most people had IBM PCs. There was no agreement on file formats or compression algorithms, and no standards for playback. If Quicktime was going to succeed, it was essential to give Windows users a way to play Quicktime movies. Apple contracted a company, San Francisco Canyon, to create a Windows 3.x player for Quicktime version 1. At this time (1992) Microsoft was thrashing in their attempt to settle on a video technology for Windows. They released Video for Windows (VfW), but its performance was disappointing. VfW was later improved, and the work was in fact done by San Francisco Canyon, who were hired by Microsoft and Intel to do the job. They did it, but they did it using code they got from Apple. Apple was able to block the theft of their Quicktime code, adding to Microsoft’s woes in delivering to Windows users a video solution independent of Quicktime and Apple. Microsoft would abandon VfW, which never really worked right without Apple’s code, and replace it with something called ActiveMovie, which they later renamed DirectShow. None of this ever matched Quicktime’s functionality. While Microsoft was busily renaming their failing projects, Apple continued to develop the Quicktime environment, expanding its capabilities on Windows to include authoring and editing as well as playback.

You might think that, because of its size, porting Quicktime to Windows would be a huge job. The way Apple chose to do it sounds even worse, but was in fact easier and smarter, and paid off in ways that could not have been predicted at the time. They ported a significant piece of the Macintosh API to Windows and left the Quicktime code mostly unchanged. This meant that new versions of Quicktime could be moved to Windows with minimal change, and as the Windows version of the Macintosh execution environment was refined, more and more Apple code could be ported. This portable version of the Macintosh application environment, running in Windows, was called the Quicktime Media Layer. It was available not only to Apple programmers but to anyone wanting to write a Quicktime program on Windows. It gave Windows programmers access to the Macintosh Quickdraw drawing API, GWorld offscreen bitmaps, much of the Memory Manager, File Manager and Resource Manager, and other features of the Macintosh toolkit. It not only made it easy to move video editing programs to Windows, but effectively became a cross-platform programming environment for Macintosh programmers hoping to break into the Windows market. The user interface features of Quicktime used the Windows user interface calls, so Quicktime Media Layer programs looked just like native Windows applications. Obviously, Apple didn’t want to encourage Macintosh programmers to port their programs to Windows, and didn’t make a big noise about this at the time.

This was happening at the same time that Apple was creating a Macintosh environment for programs running in its own Unix OS, called A/UX, and the Macintosh Application Environment for running Macintosh programs in other Unix environments. For Apple, the Quicktime Media Layer and the team that created it, led by Paul Charlton, would later prove an important resource, forming the core of the Carbon API for Mac OS X. I imagine it was also key in porting iTunes to Windows, which was huge, but that’s another story.

After Quicktime

Quicktime was written for the old Macintosh operating system, and the Quicktime Media Layer was a port of parts of that environment to Windows. All of that is obsolete. But if you look at the QuickTime guide for Windows on the Apple Developer site, you get documents dated 2006, and they describe the old QTML, with its emulation of the classic Macintosh programming model. Quicktime was an Apple Corporate Treasure that had no counterpart in the NeXT world. Like lots of other Mac OS code, it was Carbonized and kept, but never ported to the NeXT-derived Cocoa programming model. Of course, Cocoa programmers could, and did, call Quicktime directly from Objective-C. Apple made Cocoa wrappers for some of the most commonly used Quicktime functions in a framework called QTKit, and for a decade it seemed that the entire Quicktime library might eventually be incorporated into QTKit. If that had happened, you could hope it was being rewritten in Cocoa and might be kept for the long haul. But when iOS acquired its own video framework, AV Foundation, the writing was on the wall. AV Foundation is now the recommended way to do audio and video on OS X as well. Quicktime remains, but like much of Carbon it did not make the 64-bit transition. At the time of this writing there is no announcement that Quicktime (or Carbon) is to go the way of OpenDoc, but there is no doubt that Quicktime’s days are numbered. I’m sure AV Foundation is great, and Quicktime’s code is full of clever solutions to problems that don’t exist any more. But it will be sad to see it go.

BG -- Basalgangster@macgui.com

Sunday, November 3, 2013