The Long View

MoreMasters;

All problems in computer science can be solved by another level of indirection... Except for the problem of too many layers of indirection

--David J. Wheeler.

In small computer systems popular in the 1970's and 1980's, such as the Apple II and IBM PC, management of the memory space used by a program was mostly left to the programmer, or to the designers of the computer language. These computers were designed to run one program at a time. The programmer had access to all, or nearly all of the physical memory installed on the computer and was responsible for keeping track of where data were stored. Popular programming styles for these machines, including assembly language, BASIC and FORTRAN, used static memory allocation. An important part of programming for small computers was the design of a clever plan for the layout of data in memory, and memory allocation was performed when a program first loaded. The amount of memory required to run a program was an important figure of merit (less was better). Computer owners added memory to their systems as their budget priorities allowed. As your program's memory requirements shrank, your potential market expanded. Programmers reused memory allocations when possible to reduce the total memory required. For example, a FORTRAN programmer would dimension an array of real numbers large enough to hold the largest list of numbers his program would require. When that list was not needed in memory, the same named array could be reused to hold other lists of the same size or smaller. The programmer had to keep track of what was being stored in that memory space at any particular time. The array remained in that position and size in memory throughout the life of the program. The sizes of these storage spaces became part of the program’s specifications. For example, a spreadsheet program might specify the maximum number of rows and columns it could accommodate.

Finding enough memory to hold everything that was needed at the moment of greatest need during the life of the program could be a major challenge. When I was an undergraduate, a Master's degree student working in the research lab where I was studying explained how he could complete his thesis project and graduate if he could just rearrange things to find 8 more words of memory for the PDP-12 computer program he was writing as part of his project. He seemed very worried about it at the time, but he must have found them, because he graduated a few months later.

The PDP-12 came standard with 4K 12 bit words, and could be expanded up to 32K. Computers of the same type could have varying amounts of memory because their memory addressing space exceeded the amount of memory most buyers could afford. The address space is determined by the width of the register used to address memory. The PDP-12 had 12 bit registers, and so could specify 212 different (12 bit) memory locations. To address memory locations outside this 4096-word addressing space, it used a 3-bit data field register to select one of 8 4K memory banks. A single logical memory address might point to as many as 8 different physical memory locations, depending on the value stored in the data field register. It was up to the programmer to program the DF register to select the correct bank before reading or writing to a memory address. Data not needed in memory at any one moment could be written to DECtape, and read back in later. You had to decide what should be in memory and what should be on tape at each moment of the program’s execution. There were no operating system facilities to help with the decision.

A major shift was underway in the early 1980s, with the popularity of the Pascal programming language. Dynamic memory allocation was not new, of course, but it had been mostly a feature of experimental systems and advanced programming languages (like lisp and Smalltalk). Pascal included a facility for managing a large block of memory (called the heap), in which allocations could be made and released throughout the course of a program's execution using the functions new and dispose. Memory was reused without explicit planning by the programmer. When a block of memory was required, it was requested using new, and if space was available, the system returned a reference to the allocate space. When the program no longer needed that space, it could be returned to the pool using dispose. That memory could then be reused as a part of some subsequent allocation. Of course this feature, and even more sophisticated memory systems, are commonplace now. In the late 1970's, there was a movement at Apple to use Pascal internally for programming, and to provide Pascal to Apple's customers. This was largely due to the effort of Jeff Raskin, who had been on the faculty at UCSD, where a portable Pascal system for small systems had been developed. One of Raskin's former students at UCSD, Bill Atkinson, was influential in the design of the Lisa operating system, and the Lisa was built specifically to be programmed in Pascal. The Lisa Pascal system (like Apple’s Pascal for the Apple II) provided a primitive form of dynamic memory allocation, that worked a little more like a stack. You could allocate space on the heap using new, and if space was available it would be allocated at the first free location on the heap. But you couldn't dispose of any memory block you chose. Allocated blocks were maintained in a series. To release a dynamically allocated memory block you also had to release all blocks that were allocated after it. This approach required that dynamic memory allocation to be arranged in a careful order. To be most efficient, memory had to be released in reverse order of allocation, which is not necessarily the most natural order. On the other hand, the Lisa heap never got fragmented. You couldn't dispose of any allocated block in the middle of the heap.

Heap Fragmentation

The biggest problem with more flexible dynamic memory allocation was heap fragmentation. Usually, new allocations are placed in the first available free block of memory of the requested size or larger, and memory blocks can be disposed in any order. If blocks of memory are not disposed in reverse order of their allocation, blocks of free memory will become available in the spaces between blocks that are still in use. The size and placement of these gaps in memory is hard to predict. If you then need to allocate a new block of memory that is even one byte larger than the largest of the free blocks, space cannot be found and the allocation fails. There could be lots of free blocks, whose combined size is much bigger than the space you need, but that doesn't help. Your program is effectively out of memory as far as that allocation is concerned. The whole point of dynamic memory allocation is to be able to allocate space in any order, and not have to worry about exactly where it is going to go relative to everything else. If a program is going to allocate memory in response to a user’s actions, pretty soon the heap is going to be a mess.

The Macintosh Memory Manager

I emphasize all this history about memory management on small computers to explain how revolutionary the Macintosh Memory Manager was. Of course, I’m not saying that Bud Tribble, Andy Hertzfeld and Martin Haeberli invented dynamic memory allocation. This kind of thing had been done before. It just wasn’t usually available as a standard operating system feature of personal computers. It certainly was not available on the Apple II or the IBM PC, the Macintosh’s main competition. The Macintosh implementation was especially ingenious and efficient. Of course, because it was new, it was also unfamiliar to most programmers. To use it well required some patience to read the documentation and understand how it worked. To read about it now, you would get the impression that the Macintosh memory manager was a weakness, rather than a strength. That impression would be wrong. Every part of the operating system was enhanced by it, and no Macintosh program needed to ever suffer from heap fragmentation.

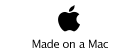

The Macintosh initially had 32 bit memory address registers, but only 24 bit memory addressing, so the upper 8 bits of memory addresses were not used. In System 7, memory addressing expanded to 32 bits. Even 24 bits could address more memory (16 MBytes) than could be afforded by early Macintosh owners. Macintosh programs addressed memory directly. By that I mean that the logical memory addresses and physical addresses of memory were exactly the same. Programmers could have their programs query the operating system to find out how much memory was available on the machine at run-time, and could adjust memory allocation accordingly. The memory occupied by a program was divided into three general areas. One was the global address space where static memory allocations of the old-fashioned kind were stored. The size of this global address space was decided when the program loaded, and stayed constant throughout execution of the program. Included in this were the Quickdraw globals, jump table, and the programs parameter area, all required by the operating system. Global data were stored relative to register A5, and were located at the top of the program’s memory addressing space. The second division of memory was the stack, used for storage of activation records of subroutines and for their local variables. The stack grew from the top down. Its starting (highest) address was available to programmers in the system global variable CurStackBase, and its current lowest address was stored in register A7. The rest of a program’s memory space was allocated to the Memory Manager to use for dynamic memory allocations. The beginning of the heap (it’s lowest address) was available to programmers in the system global variable ApplZone. As memory was allocated in the heap, it would grow up in the direction of the stack. In the original Macintosh operating system, space for code was allocated in the heap. Blocks of code called segments were loaded and unloaded into the heap in the same way as data. The Main Segment of memory remained locked and resident at all times. Other code segments could be unloaded when not needed, and could be relocated, just like any other block of memory loaded on the heap. Above and below the program’s heap zone, the logical (and physical) memory space was occupied by operating system resources like the video and sound buffers, the toolbox ROM, the system heap and low memory globals.

How To Do It Wrong

It was a great scheme, but if you wanted to, (or if you didn’t know enough about it) you could screw it up. You could use NewPtr. Why even have NewPtr? Isn’t that just asking for trouble? There were valid uses for NewPtr, for example, in porting programs from other systems that didn’t have relocatable blocks. Anyway, you empower programmers by giving them new and safer ways of doing things, not by taking away the dangerous mechanisms that require special care. And the Memory Manager did a great job of incorporating use of NewPtr into it’s plan for memory management. Whenever you used NewPtr, the Memory Manager would try to move some or all relocatable blocks up so that the new non-relocatable block could be placed as low as possible on the heap, where it could safely stay for a long time without getting in the way. This often made it easy to use NewPtr early during execution of a program to allocate memory that would stay resident for a long time, and have it not interfere with subsequent the dynamic allocations. But mixing of NewHandle and NewPtr allocations over a period of time would almost certainly result in the placement of nonrelocatable blocks that would partition the heap into separate pieces. Compacting memory would collect as much free space as possible within each piece, but no more.

Nice and tidy heaps as seen using System Watch by Joe Holt.

Other parts of the Macintosh operating system used the Memory Manager and allocated stuff in the same heap as you. Resources were loaded into the heap when you used GetResource, or when the Menu Manager or Window Manager or List Manager or who knows what other manager used the Resource Manager to load their stuff into memory. Usually, you didn’t think too much about what the Resource Manager was doing to your heap, till you looked at it using some utility like ZoneRanger or Swatch. Of course, this was the payoff, because the whole idea of the Memory Manager was to allow all those other parts of the operating system to get chunks of memory that they needed when they needed it to respond to user actions. They were written by people who understood the Macintosh Memory Manager really well, and they were very well behaved. But these things had their quirks. The Resource Manager kept a copy of the Handle to all resources in memory, and might release that memory. It didn’t know whether you were using that Handle at any particular time. If you kept a copy of the Handle of a resource, there was a chance that it could go stale when the resource was purged from memory. It was best not to hang on to Handles that belonged to resources, but rather to ask the Resource Manager for a fresh copy whenever you needed to use it. All this was written in Inside Macintosh, but what programmer wanted to sit around reading when there was code to be written?

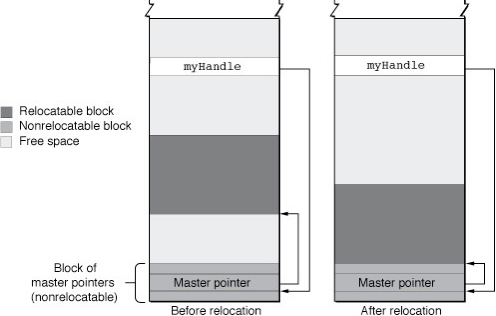

The block of Master Pointers needed to be as big as the maximum number of all the Handles used at any one time during execution, including the ones used by the Resource Manager and everybody else. If you ran out of Master Pointers, a new block would be created by the Memory Manager. This would be a non-relocatable block, and if the heap was pretty loaded up, it could end up being placed pretty high in memory. You could ask for another block of 64 Master Pointers with the call MoreMasters. It was best to do this first thing during program initialization, before the heap got all messed up. If you were going to have a lot of Handles, you needed to call MoreMasters several times. You probably didn’t know how many Handles you were going to need.

When You Ran Out of Memory

The Memory Manager was brilliant, but it could not give you more memory than was there. Sooner or later you were going to run out of memory. The user of your program could just keep going to the File menu and opening more files. Each one was going to need a window and buffers for data. Maybe the user would open a bunch of windows till almost out of memory, and then decide to print a document. Printing would require a bunch of memory allocations, and maybe your printing code segment had been unloaded and needed to be loaded into memory. There were more paths to low memory conditions than you could possibly anticipate, so out of memory conditions always came as a surprise to your code. It found that out you were out of memory by discovering that a call to NewPtr or NewHandle or GetResource or GetWindow or something like (that should return a Pointer or Handle) that had returned nil. You checked for that right? If not, your program was going to bomb with extreme prejudice when you try to dereference it or pass it into some toolbox call. Even if you were careful about it, and found out about it in a timely way, an out of memory condition meant your program was in trouble. You are going to have to tell the user about this. Maybe you were trying to make a big allocation when things failed, and once you back out of that, there is actually still a reasonable amount of memory left. That would be good, because telling the user he is out of memory is going to require... the allocation of some additional memory. You need to open an alert, which is a resource that needs to be read into memory. If you are really out of memory, even that will fail. At that point you are really screwed. If you are smart, maybe you had allocated an emergency buffer that was not used for anything, but could be disposed to free up some memory in special situations like this. Starting with Multifinder, and getting better with System 7, it became possible to get some “temporary memory” from space outside your program’s partition. Assuming there was some available (there usually was), it could save the day in a memory pinch. But it wasn’t possible to tell the Resource Manager or other parts of the toolbox to use temporary memory when doing its stuff. And I think that some of the toolbox code was not as careful at seeing whether its memory allocations had failed or not. Sometimes, no matter what you did, an out of memory condition meant an ugly crash, data loss, and an unhappy user.

Problems with the Heap of Heaps

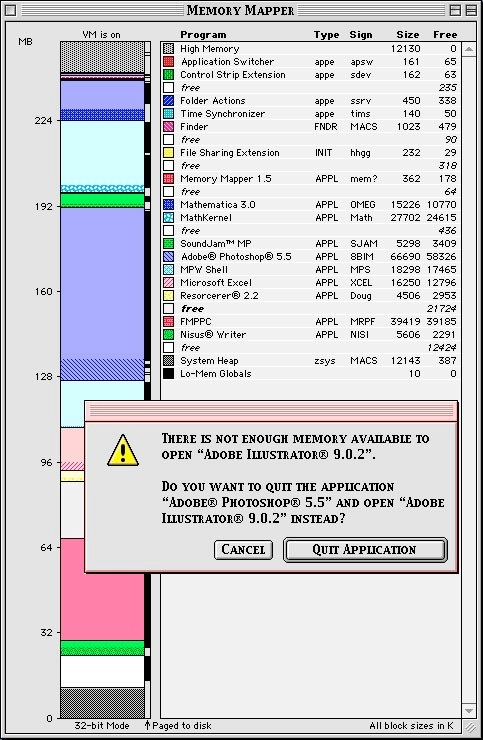

When Switcher and then Multifinder arrived, multiple programs got stacked into the exact same logical memory space. Each program had its own global area, stack and heap, and they got started up dynamically when the user asked them too, in a way not so different from the way memory blocks were allocated in the heap by the Memory Manager. But there was one difference. The Process Manager could not load programs onto the Macintosh memory space as relocatable blocks. Programs never moved. They were loaded into memory starting at the highest location of memory and working down. If the user started up a lot of programs and then quit some of them in anything other than reverse order they were started, this left holes in memory. Programs asked for a particular size chunk of memory when they started up. Actually, they would make an offer to the process manager. They would like to have their preferred amount of memory, but they would settle for anything greater or equal to some minimum size partition (all this was set by the user in the Get Info dialog). If the next program could not fit in any of the spaces available, the Process Manager could not load the program, and would have to apologize to the user, who knew nothing about how he had fragmented the heap. If he quit everything and then started it all back up, it might all fit. Also, if a program had used up all the memory in its partition and needed more, partitions could not be resized while allocated, even if there was adjacent space for them to grow into. So if a program was out of memory, quitting all the other programs didn’t help. This made no sense to users. The whole idea of having to constantly mess around with the memory settings in the Get Info box made no sense. And there was no way to avoid changing the settings. It wasn’t practical to always give 70 MBytes of space to Photoshop, but if you were going to work with some particular set of big documents, you were going to have to know that and ask for a lot of space right from the beginning.

A Fragmented Multifinder Heap, as seen with Memory Mapper 1.5 by R. Fronabarger. There is enough space for Illustrator, but not all in one spot. Virtual memory is on, and some parts of memory are paged to disk, but this doesn’t help. The problem is that the logical addressing space is fragmented.

Multifinder was not anticipated by the original designers of the Macintosh operating system. It was a post hoc solution to add multiprocessing to a single job operating system, and a way to relocate code partitions was not included in the Process Manager. In the end, it was this kind of heap fragmentation that the Macintosh operating system is remembered for, instead of the brilliant way that it solved the classic heap fragmentation problem. Making heap zones relocatable would have solved the problem, in the same way that relocatable dynamic memory solved the heap fragmentation problem within programs. Like relocatable heaps, program memory allocations could have grown dynamically, making the memory settings in the Get Info box unnecessary. This was never done, probably because it wasn’t considered the correct way to solve this. The correct way, of course, was to use a paged memory management system to put each program in a separate logical memory space. The problem arose because all programs shared a common logical addressing space. Although programs have to share the same physical memory, there is no reason why they needed to share the same logical addressing space. Virtual memory, when it was introduced to the Macintosh in System 7, remapped the relationship between logical memory and physical memory, allowing memory partitions to be larger. But the problem with the layout of logical memory was not addressed. Programs were running into each other in the logical addressing space, and virtual memory but did nothing to fix it. Users and computer pundits, of course did not really understand the term virtual memory. To them virtual memory ought to mean that programs did not run out of memory, ever. Apple didn’t fix it for a long time, because they hoped to fix it right, first in Pink, and then in Copland, but neither of those happened. The problem brewed for 10 years, until it was resolved by the introduction of OS X.

-- BG (basalgangster@macGUI.com)

Saturday, January 22, 2011