The Long View

PowerPC

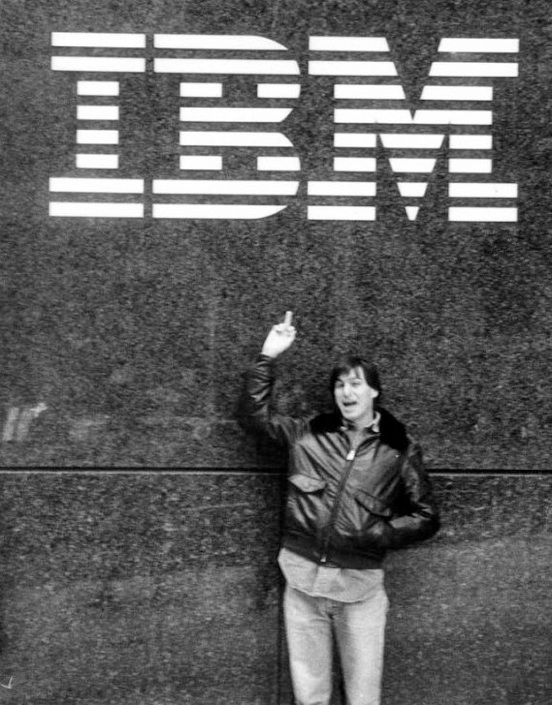

In Apple’s famous 1984 Super Bowl advertisement, a woman wearing a Macintosh T-shirt throws a hammer through a screen displaying a slogan-spouting big-brother type character whose voice and image are mesmerizing an audience of zombie-like grayscale people. Question: What real-life company does this big-brother character represent? Is it (a) Microsoft, or (b) IBM? Of course, the correct answer is (b) IBM. In the 1980s, Apple’s enemy was not Microsoft. It was IBM. Microsoft was an enemy, of course. They were cloning the Macintosh operating system, stealing the whole thing, but nobody at Apple knew that yet, or at least they acted like they didn’t know it. In the 1980s, Microsoft was an Apple ‘partner’. They made some of the most successful application software for the Macintosh, and the Macintosh needed application software. Apple was a computer company, whose profit came from selling computers. Microsoft didn’t make a computer, and so was not really a direct competitor. IBM, on the other hand, had been eating Apple’s lunch since 1981 with their IBM PC. It wasn’t an innovative or exciting new product. In fact, none of it was really invented by IBM. It was made with off-the-shelf parts and used a Microsoft imitation of an already outdated operating system made by somebody else. Despite all this, the IBM PC outsold the Apple II.

The IBM PC didn’t succeed because of technical excellence. It sold because it was made by IBM. For decades, IBM had been synonymous with the big mainframe computers used by the US government and large companies. The computer industry had long been dominated by IBM, and everybody in the business world knew the old saw that “nobody ever got fired for buying IBM”. IBM used fear to rule the computer world. When the IBM PC came out, it promised to be the first personal computer that was a real professional machine that would increase your productivity, not just a hobbyist's toy. Of course it wasn’t really any different from the other personal computers available at the time. The success of the IBM PC was based on an illusion, an unfulfilled promise represented by the IBM logo. The famous Apple TV commercial said that the Macintosh would break the hypnotic spell that the name IBM had cast on the personal computer market.

The Enemy of My Enemy

The big-brother commercial reflected the attitude of Apple founder and Macintosh product leader Steve Jobs. But the Macintosh did not meet sales expectations in the first two years, and Steve Jobs was forced to resign in late 1985. The people who took his place were not computer visionaries; they were businessmen. I don’t know what brand of mainframe computer was in use at Pepsi while John Sculley worked there, but I’d be willing to venture a guess.

In 1985, IBM and Microsoft started work on a joint product, a new operating system for IBM personal computers. It was a Macintosh-like operating system with a graphical user interface, called OS/2. The underlying operating system was announced in 1987, and the graphical user interface part appeared in 1988. The new operating system ran on a new line of IBM personal computers called PS/2.

The generic nature of the original IBM PC made it easy to rip it off, and ripped off it was. Microsoft retained the right to provide the operating system to any and all, including the people who were ripping IBM off. And they did it with gusto. Like Apple, IBM was a computer company, but unlike Apple, their product was basically an illusion. When every two-bit no-name company started making computers and calling them IBM-compatible, the illusion was broken in a way Apple never could have managed. Customers saw that a no-name IBM clone was actually functionally equivalent to an IBM PC. IBM wanted their next computer project to be harder to rip off. They used some IBM-owned proprietary components, and they wanted to build OS/2 so that it would work only on IBM PS/2 computers. This did not fit into Microsoft’s vision. They wanted to make operating systems that they could license to any and all comers. While working on OS/2 with IBM, they were also perfecting their own Macintosh OS rip-off, Microsoft Windows. The first version of Windows that people were willing to use was version 3.0, released in 1990. Although it was nowhere near as good as OS/2, it ran on existing cheap PC clones, and the IBM spell on personal computer buyers had already been broken. Windows won, and PS/2 with its new operating system lost. In the end IBM went back to making clones of their own previous design. Microsoft leveraged their work on OS/2 for a later project of their own, Windows NT. IBM never recovered their position in the PC market. The partnership between IBM and Microsoft was poisoned.

Apple sued Microsoft over their rip-off of the Macintosh user interface in 1988, and the suit was decided in 1994, in Microsoft’s favor. Apple and IBM, both computer companies, found themselves defeated by Microsoft’s strategy of empowering parasitic small-fry computer companies that invented nothing and lived off the innovations of others. With Steve Jobs gone and John Sculley at the wheel at Apple, IBM was not the enemy any more. And IBM was looking for partners who could help them against Microsoft.

The RISC Movement

The Macintosh line originally employed the Motorola 68000 CPU, the first in what became the m68k line of processors. Apple used the 68020 and 68030 in the Macintosh II line, and the 68040 in the Quadra/Centris line. Motorola was working on the next processor in that family, the 68060, but Apple never used it. Instead they decided to change processors completely. I’ve never heard a definitive explanation for this decision. The performance of the m68k line of processors had kept up with the Intel x86 line of processors used in the IBM PC clones. Intel and Motorola had played leapfrog with the introduction of each new generation, and while Intel usually led Motorola by a little at each step, Motorola processors kept up, and sometimes went ahead of Intel. For example, in 1992, the PS/2 offered an Intel i486 at 25 MHz, and the Quadra 950 had a Motorola 68040 at 33 MHz. Cycle by cycle, the 68040 was the equal or better of the i486. In 1991 and 1992 the processors in Macintoshes were the equal or better of those used by the competition. Why did Apple think it was necessary to change processors?

Maybe Apple was just swept up by the technological trends of the time. For years, there had been a sea change in thinking about CPU design. Both the m68k and the x86 lines of CPUs were of a type that came to be called complex instruction set computers (CISC). Their instruction sets were designed to be powerful tools for assembly level programmers. Some of the instructions could do amazing things, like doing an arithmetic operation on an entire array of memory locations. Advanced addressing modes allowed instructions to alter values in memory, without first moving them into the CPU’s registers. These complex instructions were executed using microcode interpreters, which translated an assembly language instruction into a sequence of lower level operations. Different instructions and different addressing modes took different times to execute, as they were translated into different numbers of steps at the lower level. Complex instruction sets made these CPUs a joy to program in assembly language, but most compilers weren’t clever enough to use all of the CPU’s features in an optimal fashion. Any good assembly language programmer could look at the assembly code made by the average C, Pascal, or Fortran compiler and immediately see how it could be improved. Assembly language programmers didn’t have much respect for compilers in those days. But most code was written by compilers. Assembly language programming was disappearing.

Also, chip design was moving away from just trying to push to faster clock speeds. Designers were trying to do more per cycle using pipelining and superscalar design. Pipelining meant that instructions would be fetched from memory and worked on in overlapping stages, assembly-line fashion, rather than one at a time. The various processes that needed to be done by an instruction could be started while earlier instructions were still working through the pipeline in the CPU. Want the pipeline to run smoothly? Make every instruction take the same amount of time, so they move down the pipeline in a nice predictable set of steps. The complex instruction set didn’t work in the pipeline very well. Superscalar design meant dividing up the things that computers do, like integer or floating point arithmetic or memory management, and executing them in different parts of the CPU in parallel. These trends produced a whole movement in CPU design that was built around removing all the nice features (for humans) from the instruction set. The resulting computer designs were called Reduced Instruction Set Computers (RISC). What was reduced was the complexity of the instructions, not their number. In fact, RISC had to use a lot more instructions to do a task, but they were simple ones. If you wanted to add two numbers stored in memory, you had to move the two numbers into registers one by one, add them, and move the result back to memory. This process, which took 4 instructions, would have been one instruction on a CISC computer. Programs were longer (because each of these simple instructions has to be stored in the program) and writing in assembly language was torture, but each instruction would take about the same amount of time as any other. And even compilers, it was hoped, would be able to write efficient code using such mind-numbingly simple instructions. The simplicity of the instruction set also translated into fewer transistors in the computer. With chip sizes measured in transistors, the RISC design could get more processing from fewer transistors, a smaller piece of working silicon, and less heat dissipation. The result would be cheaper computers that use less energy and go faster because of the parallelism associated with pipelined instructions. Sounds pretty good.
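The add-two-numbers example above can be made concrete with a toy model. This is only a sketch: the two "machines" and their instruction names (`ADD_MEM`, `LOAD`, `ADD`, `STORE`) are invented for illustration and are not real m68k or PowerPC mnemonics. Each function performs the same task and returns how many instructions it needed.

```python
# Toy model of "add two numbers in memory" on two imaginary machines.
# Instruction names are hypothetical, chosen only to show the idea.

def run_cisc(memory):
    """CISC style: one instruction reads both operands from memory,
    adds them, and writes the result back to memory."""
    program = [("ADD_MEM", "a", "b", "c")]
    for _op, src1, src2, dst in program:
        memory[dst] = memory[src1] + memory[src2]
    return len(program)


def run_risc(memory):
    """RISC (load/store) style: only LOAD and STORE touch memory,
    and ADD works purely on registers."""
    regs = {}
    program = [
        ("LOAD", "r1", "a"),         # r1 <- memory[a]
        ("LOAD", "r2", "b"),         # r2 <- memory[b]
        ("ADD", "r3", "r1", "r2"),   # r3 <- r1 + r2
        ("STORE", "r3", "c"),        # memory[c] <- r3
    ]
    for instr in program:
        op = instr[0]
        if op == "LOAD":
            regs[instr[1]] = memory[instr[2]]
        elif op == "ADD":
            regs[instr[1]] = regs[instr[2]] + regs[instr[3]]
        elif op == "STORE":
            memory[instr[2]] = regs[instr[1]]
    return len(program)
```

Both versions leave the same sum in memory. The RISC version is four times as long, but every one of its steps is simple enough to fit a fixed-length pipeline stage.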

Reduced instruction set CPUs had been used in a limited way for a long time, but around 1990 they went viral in the computer industry. Sun introduced their SPARC processor in 1987, offered their first workstation based on the processor in 1989, and over the next few years shifted their line of workstations from m68k to SPARC. Silicon Graphics abandoned the m68k for a RISC processor design by MIPS at about the same time, and HP shifted from m68k to their own RISC design. Even the PC world was thinking about RISC. IBM developed a RISC design, called Power, for their line of workstations (but not PCs). Intel developed a couple of RISC designs, called i860 and i960, and for a while considered them possible successors to the x86 CISC family in PCs. They were not backward compatible with the x86 however, and there was little enthusiasm in the PC world (especially among application developers) for abandoning the existing code base and rebuilding the world’s library of PC software for the new processors. Probably under pressure from Microsoft or other customers, Intel instead decided to keep the CISC architecture, and to do the hard design work required to implement pipelining in the old instruction set. No doubt Apple felt that they had to go with this trend to keep up, and that the Motorola 68k family’s days were numbered. Motorola were developing a RISC processor of their own, called 88000, which (like Intel’s i860 and i960) was not compatible with their own previous instruction set. No doubt if Apple had been committed to the 68k, they could have convinced Motorola to continue aggressive support for it. Motorola, like Intel, had already begun to implement RISC-like features in the m68k line. But Apple weren’t committed to CISC and they didn’t try to convince Motorola. Apple at first worked on porting the Macintosh to Motorola 88000, but ultimately they changed course and approached IBM. The real story behind that part of the decision would be interesting to hear. Maybe someday somebody who was there will fill us in.

The AIM Alliance

What we have been told is that in April 1991 John Sculley held a demonstration of Apple’s advanced operating system, called Pink, running on an IBM PS/2 computer, for some IBM engineers. According to the story, this version of Pink (which never actually came to market at all) looked a lot like System 7. System 7 was new in 1991, and at the time of that meeting had not yet been officially released. It must have looked very futuristic to those IBM guys. Not surprisingly, IBM was won over, and agreed to develop a version of their Power processor for the Macintosh, and to cooperate in the development of a host of software products, including Pink itself. I can see why IBM was excited about this. They could hope to get a mass market for their RISC processor family (which at that time sold only in very low volume), and they would get an operating system that could compete with Microsoft. But this deal was billed as a big achievement for Apple. Why? There were lots of RISC processors around. Why build a new one with IBM? Why not use Motorola’s 88000, or SPARC, or MIPS? And why would Apple want IBM’s cooperation on the next version of the Macintosh operating system? I can’t figure any of this out at all. Maybe Apple executives were hoping for a bit of that old IBM magic. But the magic was already gone.

The PowerPC was built by an Apple, IBM, Motorola alliance, called AIM and located in Austin, Texas. The processor itself was a new design, including features from IBM’s Power processor and from Motorola’s 88000. Despite Motorola’s involvement, there was no attempt to make the processor compatible with the m68k processor, and so the existing Macintosh operating system and all Macintosh programs were totally incompatible. The first of the PowerPC processors, the 601, was ready to go in 1993. The first Power Macintosh systems, the 6100, 7100, and 8100 were offered in March 1994, and came with the first PowerPC-compatible operating system, System 7.1.2. That was fast. How’d they do it?

The Compatibility Problem

Microsoft wanted to stick with Intel x86 so they wouldn’t have to rewrite their entire operating system. With System 7 just being released, and most of it written in m68k assembly language, Apple decided to do just that. What were they thinking? Apparently, they had been toying with the idea for a while, in a project Darin Adler says was called Jaguar (no relation to Mac OS 10.2), or sometimes Red (because it was pinker than Pink). Their solution was to ease into the new processor gently. Old software, and m68k code in the operating system, would be executed by emulation. If the only working part of the PowerPC version of the operating system were the emulator, then everything would work (albeit slowly) immediately. Programmers would have time to convert their code to PowerPC binaries, and the operating system could be converted in parts. If the operating system were to be converted in parts, then at least for a while (maybe for a long while) it would consist of a mixture of m68k and PowerPC executables. To accommodate this situation, it would be necessary to rapidly switch back and forth between m68k code running in the emulator and PowerPC code running native. So there had to be a way for execution state to automatically switch between native and emulated, depending on the nature of the executable code. Switching between m68k and PowerPC code would happen when branching at subroutine boundaries, so branches that required the switch needed a special mechanism. That mechanism was called the Mixed Mode Manager. Together, the Mixed Mode Manager and the emulator were almost enough to get started. The other thing that was needed was a PowerPC runtime environment. Why a new environment? Well, for one thing, the old one was really deeply dependent on the features of the m68k instruction set. For example, globals were stored relative to A5. A5 is a register in the m68k. Low memory was low because it could be reached by a 16-bit offset from location zero in the m68k memory space. It would be unnatural to try to keep any of that stuff. And for a second reason, the old Macintosh program environment was old-fashioned. It didn’t adequately distinguish between memory containing code and memory containing data. It allowed modifications to code, and that stuff made it hard to get it to work and play nicely with virtual memory. It was time for a more modern design, and this was the chance to do it.
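To see why emulated code works immediately but slowly, it may help to look at the general shape of an interpreting emulator. The sketch below is not Apple's emulator and says nothing about how it was built. It is a minimal fetch-decode-execute loop over a tiny invented instruction list, with m68k-flavored names (`MOVEQ`, `ADD`, `D0`, `D1`) borrowed only for atmosphere. The step counter is an assumption of the model: it charges three units of host work for every emulated instruction.

```python
# Minimal sketch of an interpreting emulator (hypothetical, for illustration).

def op_moveq(regs, value, dst):
    """Load a small constant into a register."""
    regs[dst] = value


def op_add(regs, src, dst):
    """Add src into dst, wrapping at 32 bits."""
    regs[dst] = (regs[dst] + regs[src]) & 0xFFFFFFFF


# Decode table: maps an opcode name to the host routine that implements it.
HANDLERS = {"MOVEQ": op_moveq, "ADD": op_add}


def emulate(program, regs):
    """Run the program; return the modeled amount of host work spent."""
    host_steps = 0
    pc = 0
    while pc < len(program):
        opcode, *operands = program[pc]   # fetch
        handler = HANDLERS[opcode]        # decode
        handler(regs, *operands)          # execute
        pc += 1
        host_steps += 3                   # fetch + decode + execute
    return host_steps
```

The emulated program gets the right answer without being rewritten, which is the whole point. But the host does several things for every single guest instruction, which is why emulated code never feels the speed of the new processor.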

The PowerPC Program Environment

Chunks of PowerPC code were stored on disk in structures called Fragments. They could be loaded into memory by the Code Fragment Manager. Fragments could contain both code and data. When read into memory, the code and data sections would be separated. If virtual memory was turned on, the code section would not be loaded into the program’s partition, but into memory located above the stack of application partitions. The code section used the program file’s data fork as its backing store (requiring less disk space), and because code could not be altered in memory, swapping code in and out of memory could be a read-only operation and could go much faster. The data stored in a fragment was loaded into the program’s partition, in the heap. If virtual memory was turned off, then both code and data went into the heap. Remember the memory information in Get Info telling you that you didn’t need as large a partition in memory for your program if virtual memory was turned on?
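The placement rule just described can be summarized in a few lines. This is a sketch of the rule as stated in the paragraph above, not of the actual loader. The function name, the region labels, and the byte counts used with it are all made up.

```python
# Sketch of where a fragment's sections end up (hypothetical names and labels).

def place_fragment(code_size, data_size, virtual_memory_on):
    """Return where each section lives and how much of the application
    partition the fragment consumes."""
    if virtual_memory_on:
        # Code is paged straight from the program file, outside the partition.
        code_region = "file-mapped, above the application partitions"
        partition_bytes = data_size
    else:
        # Without virtual memory, code has to sit in the heap next to the data.
        code_region = "application heap"
        partition_bytes = code_size + data_size
    return {
        "code": code_region,
        "data": "application heap",
        "partition_bytes": partition_bytes,
    }
```

With virtual memory on, only the data counts against the partition, which is why Get Info could suggest a smaller memory size in that case.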

Once loaded into memory, fragments (both the code and data sections) didn’t move. So subroutine calls within the code section of a fragment were easy to resolve. Every fragment had a table of contents, stored in its data section, that contained pointers that could be used to find external references (imported subroutines and data stored in other fragments), to find external (global) data, and to find the data part of the fragment. To find its own static data, code in the fragment used an offset from the beginning of its table of contents. One of the 32 PowerPC general purpose registers (general purpose register 2 or GPR2) was used as a pointer to the current fragment’s table of contents, and it could be used as a base address for those data offsets. A program could (and often did) consist of a single fragment. But all programs would certainly make calls into libraries or the Macintosh toolbox ROM (that was implemented as a library), or code-containing resources not part of the program’s code fragment. When that happened, the external routine’s address would also be found via the current fragment’s table of contents. The imported function would be represented in the table of contents by a structure called a transition vector. If the routine being called was in another PowerPC fragment, the transition vector consisted of two pointers, one containing the address of the table of contents of the called routine’s fragment, and one containing the address of the routine being called. To execute such a branch, called a cross-TOC call, the contents of GPR2 would be saved on the stack, and the register would be set to point to the table of contents of the called routine. Then execution could transfer to the called routine.
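The cross-TOC call sequence can be modeled in a few lines. This is a simulation under stated assumptions, not real runtime code: the `Cpu` class, the `TransitionVector` class, and the example routine `scale_value` are invented names, tables of contents are modeled as plain dictionaries, and the restore of GPR2 on return is my reading of what saving it on the stack implies.

```python
# Toy simulation of a cross-TOC call through a transition vector.
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TransitionVector:
    code: Callable   # address of the routine being called
    toc: dict        # table of contents of the fragment that owns it


@dataclass
class Cpu:
    gpr2: dict = None                        # current fragment's TOC
    stack: list = field(default_factory=list)


def cross_toc_call(cpu, tvector, *args):
    """Save GPR2, point it at the callee's TOC, call, then restore."""
    cpu.stack.append(cpu.gpr2)       # save the caller's TOC pointer
    cpu.gpr2 = tvector.toc           # callee finds its data via its own TOC
    try:
        return tvector.code(cpu, *args)
    finally:
        cpu.gpr2 = cpu.stack.pop()   # assumed: caller's TOC restored on return


def scale_value(cpu, x):
    """A library routine that reads its static data relative to GPR2."""
    return x * cpu.gpr2["scale"]


# The library fragment's TOC holds its static data; the application's TOC
# holds a transition vector for the routine it imports.
lib_toc = {"scale": 10}
app_toc = {"scale_value": TransitionVector(code=scale_value, toc=lib_toc)}
```

The callee never needs to know who called it. Whatever GPR2 points to when it runs is, by construction, its own table of contents.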

Of course, if some PowerPC code wanted to call a routine encoded with the m68k instruction set, this approach would not work. Such calls required a mode switch, to be performed using the Mixed Mode Manager.

The Mixed Mode Manager

No program could be expected to call only PowerPC code. Much of the Toolbox ROM continued to be encoded as m68k for a long time, and other m68k code, such as MDEF resources (containing the code required to draw menus on the screen), and CDEF resources (which did the same for controls) continued to be needed. Drivers, patches, and a lot of other pieces of code were stored in m68k format in resources. So even if your program was all PowerPC, it would still be making mode switches when making calls to these things. Of course, mode switches were required when making the transition back to your code from these m68k routines. Toolbox ROM and other system code made mode switches internally, and these might also execute callback routines in your code. All callbacks, previously registered with the system code using a simple pointer to the callback routine (called a ProcPtr) had to be assumed to involve a mode switch. Of course, you would want to switch modes as infrequently as possible, but this was mostly not under your control. Some of the operating system was PowerPC, some was not. You never knew which was which, and it was always changing as Apple ported more and more of it to PowerPC. There was nothing the application programmer could do about any of that.

Mode switches were complicated. They had to be done by the Mixed Mode Manager. Rather than just loading up the stack and branching to the subroutine, you had to load up the stack, and then make a Mixed Mode Manager call, CallUniversalProc, passing it a pointer to a structure that described the subroutine you wanted to call. You had to create the structure, called a RoutineDescriptor, ahead of time. The pointer to the RoutineDescriptor was called a UniversalProcPtr. This produced one of the biggest coding issues confronted by application programmers writing for the PowerPC Macintosh. All callback routines written in PowerPC code had to be assumed to be called from m68k code. Therefore, instead of just using a pointer to the callback routine, you had to create a RoutineDescriptor, initialize it with the address of your callback, and then pass the resulting UniversalProcPtr to system routines that required a callback. Within Apple’s operating system code, they must have had to manage a lot of mode switches. But for a normal application program, it was mostly handled for you.
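The idea behind the routine descriptor can be sketched as a small simulation. The Python names below are loose stand-ins for the RoutineDescriptor and CallUniversalProc mentioned above, not the real Toolbox interfaces, which took different arguments and also described parameter layout. The `log` list exists only so the mode switches are visible.

```python
# Toy model of calling through a routine descriptor (hypothetical API).
from dataclasses import dataclass
from typing import Callable

M68K, POWERPC = "m68k", "powerpc"


@dataclass
class RoutineDescriptor:
    isa: str            # instruction set the routine is written in
    routine: Callable   # the routine itself


def call_universal_proc(upp, current_isa, log, *args):
    """Call the described routine, switching modes only if the caller and
    the callee use different instruction sets."""
    if upp.isa == current_isa:
        return upp.routine(*args)                    # plain call, no switch
    log.append(f"switch {current_isa}->{upp.isa}")   # enter the other mode
    result = upp.routine(*args)
    log.append(f"switch {upp.isa}->{current_isa}")   # and come back
    return result
```

The caller does not need to know which instruction set the callee uses. It always goes through the descriptor, and the switch happens only when the two sides differ. That is why a callback had to be wrapped even when, on a given system version, the code calling it turned out to be native.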

The QuickDraw Globals

This left the QuickDraw globals. In the m68k world, they were created automatically, and referenced relative to A5. In the PowerPC program space, every program was expected to allocate space for them in its own heap. In addition, the Process Manager created a Mini-A5 world, which could be used by m68k code running in the context of a PowerPC program. All this was just enough to satisfy the emulator.

The m68k emulator was great, but it wasn’t perfect. For one thing, it did not include emulation of the floating point processor. Code that accessed the floating point processor would not run in the emulator. And of course the emulator was slow, so m68k code running in it on PowerPC did not see any of the promised performance benefit.

Transition to PowerPC

This clever design gave programmers time to move their programs to PowerPC, and gave Apple time to gradually move all the various parts of the operating system to it as well. Apple took advantage of this, by only gradually removing all the m68k code. In System 7, only a small portion of the operating system was PowerPC native, but the proportion grew over time. Apple said they were porting the most commonly used routines, the ones that made the most performance difference, first. Looking at the System file for System 8.6, I can still find a lot of m68k code, in CDEFs, PACKs and DRVRs for example.

PowerPC Performance

Was the move to PowerPC a good one? Obviously it was a bad move to get involved with IBM. Almost everything Apple did with them turned bad. But how about the original goal of switching to a RISC processor?

Firstly, RISC didn’t really pay off in a big way for anybody. Most companies who staked their future on the idea that RISC could outperform Intel x86 and give them the stuff they needed to outcompete Intel are gone now, and Intel remains. DEC, Sun, and Silicon Graphics have all failed and gone. IBM, Apple and HP remain. IBM is no longer competitive in the PC market. Apple and HP have given up RISC processors, and switched to Intel x86. So why wasn’t RISC, and specifically PowerPC, faster, cheaper and cooler? Actually, it was, at least at first. The early PowerPC processors did have an edge on Intel x86 in every category: speed, cost, and heat. To keep up with RISC processors, Intel added pipelining and superscalar design to the x86 processors, despite their complex instruction set. This was difficult and complicated, and caused their transistor count to go up, raised the cost, and heated things up some. But once this price was paid, future expansions of the x86 line did not add to the burden of maintaining the instruction set. As RISC and CISC chips got bigger and more complicated and faster, they did so in parallel, and the price paid for maintaining backward compatibility of the x86 instruction set, once paid, shrank in proportion to the total chip design. Over time, the RISC advantage faded away. What gave Intel the advantage wasn’t speed, cost, or energy dissipation. It was compatibility. To keep their compatibility, Apple had to run legacy portions of their operating system in emulation. The performance cost of this also shrank over time.

The PowerPC transition modernized the Macintosh operating system. It gave system programmers the opportunity to improve support for virtual memory, and move in the direction of more modern memory management. Also, the PowerPC processors, while they did not move way ahead of Intel x86 as hoped, did provide Apple with a very powerful CPU family that kept a little ahead of Intel all the time. This was not appreciated, because Intel kept clock speeds a little higher than PowerPC throughout most of the period, and benchmarks were too difficult for the computer press, and the public, to understand. The power utilization advantages of the PowerPC were especially evident in the Macintosh PowerBooks, which for a long time were more powerful and had longer battery life than their Intel-inside competitors. Despite all this, Apple lost market and mind share during this period, not because of hardware, but because of software. Apple was unable to offer something perceived as better than the Windows operating system, starting with the introduction of Windows 95, through the end of the decade. These problems were not the fault of Macintosh hardware, which was the equal or superior of that used on Windows machines during that entire period.

The End – Finished With IBM

The high point of the PowerPC era ran from 2000 to 2005. During this time, Intel was struggling in a commodity market with AMD, and the AIM alliance produced the most advanced processors available on personal computers. With the release of OS X, and a series of application software triumphs, Apple had an undeniable software advantage over Windows. This combined with superior hardware to power the incredible Apple rebound. PowerPC 750 (called G3 by Apple) processors were released in 1997 and were used through the end of the decade. The low power versions powered the famous Wallstreet, Lombard and Pismo PowerBooks, which are considered classics (by anyone who knows about stuff like that), and are still used and praised, over a decade later. In those PowerBooks, the G3 reached clock speeds up to 500 MHz. The PowerPC 7400, designed by Motorola and IBM but manufactured only by Motorola, added a vector processing unit, called AltiVec. This gave it a big boost in benchmark performance, but most applications didn’t use this capability. G4s achieved speeds up to about 1.7 GHz in PowerBooks. The G4 was replaced with IBM’s PowerPC 970, called G5 by Apple, in 2003. Dual, and even quad core versions of these were sold, at clock speeds that made it to 2.7 GHz. It was a 64-bit processor and included the AltiVec vector processor. This advanced processor dissipated a lot of heat, and required special cooling, but it powered extremely powerful Macintosh desktops till discontinued in 2006.

Steve Jobs announced the switch from PowerPC to Intel in June 2005, at Apple’s Worldwide Developers Conference. He revealed that OS X had been running on Intel at Apple all along, and it would not take long to make the switch. By the end of 2006, Intel desktops and notebooks were available from Apple.

The stated reason was the failure of IBM to make a promised G5 running at 3 GHz, and the absence of a low power version of the G5 that could be used in PowerBooks. Low power G5 chips were announced by IBM in July 2005, one month after the Intel announcement. Certainly Apple had been informed about that at least one month earlier. There were 3 GHz Mac Pros available for a little while, but it seems to me unlikely that either of the reasons stated had much at all to do with the decision. Partnership with IBM was the last vestige of the bad old days at Apple.

Five years later, what is the clock speed of your Macintosh?

-- BG (basalgangster@macGUI.com)

Monday, December 20, 2010